Perhaps no topic has had more ink spilled by biobloggers – especially if you include electrons dripping out of laptops – than the tyranny of the Journal Impact Factor. A metric designed by Eugene Garfield to help librarians select journals, the IF has been routinely abused for purposes never intended. How can we reverse this process and reclaim the literature for those who publish research, evaluate research for hiring and tenure decisions, or fund research?

The wrongful uses and misunderstandings of the IF are legion. A measure of a journal’s overall citation rate, it is commonly misapplied to individual articles or, even worse, to the individual authors of those articles. The IF can be heavily influenced by even a single highly cited article, which can push a journal into an impact realm where it doesn’t belong. The flip side of this skew is that a small fraction of articles attracts the bulk of a journal’s citations, so most articles accrue considerably fewer citations than the IF would suggest. Journals can also manipulate their IF; publishing more reviews, for example, tends to increase it. The IF’s three decimal places give an unwarranted impression of precision. And citation patterns are heavily influenced by discipline: more researchers cite work on cancer than on Chlamydomonas, for example.
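To make the skew concrete, here is a minimal sketch that treats the IF loosely as a mean number of citations per article. The actual IF is computed over a specific citation window, which this sketch ignores. The citation counts are invented for illustration, and the function name `citation_summary` is hypothetical.

```python
from statistics import mean, median

# Hypothetical citation counts for 20 articles in one journal.
# These numbers are invented; they are not data from any real journal.
citations = [0, 0, 1, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 5, 5, 6, 7, 8, 10, 400]


def citation_summary(counts):
    """Summarize how well a mean-style score describes a typical article."""
    total = sum(counts)
    avg = mean(counts)
    return {
        # The IF-like figure: average citations per article.
        "mean": avg,
        # What a typical article actually receives.
        "median": median(counts),
        # Fraction of all citations owed to the single most-cited article.
        "share_of_top_article": max(counts) / total,
        # How many articles fall short of the journal-wide average.
        "articles_below_mean": sum(1 for c in counts if c < avg),
    }


if __name__ == "__main__":
    for name, value in citation_summary(citations).items():
        print(f"{name}: {value}")
```

With these invented numbers, the mean is about 23 while the median is 3, and 19 of the 20 articles sit below the journal-wide average. One blockbuster paper does nearly all the work.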

My favorite confusion about the IF comes from Eugene Garfield himself. He writes, “of 38 million items cited from 1900-2005, only 0.5% were cited more than 200 times. Half were not cited at all…”. Think about that for a second: half of the cited items over a 105-year period were not cited.

So why does nearly every grad student and postdoc – not to mention a lot of PIs – seek to publish in journals with the highest IF? And given that the majority of journals with the loftiest scores are edited by professional rather than academic editors, why do we relinquish so much of our evaluative responsibility to those with some of the least experience in doing science? Why do we sustain the vicious cycle that results when we send our best work to the journals with the highest IFs, which allows them to maintain their standing, which leads people evaluating scientists to use journal names as a proxy for the quality of research?

I have heard only one rationale for this situation that makes sense, albeit twisted sense: any postdoc who has persisted through the entire process (the convince-the-editor-to-allow-the-manuscript-to-go-out-to-review process, the argue-with-the-editor-that-the-negative-review-is-not-relevant process, the rally-the-troops-to-get-the-work-done-for-the-revision process, the wear-down-reviewers-and-editor-in-the-endgame process) to wind up with a publication in one of the famed one-word journals shows the grit and guts necessary to succeed as a faculty member, regardless of whether their publication makes a valued contribution.

Tame measures have been proposed for replacing a lame measure with the same sort of measure: instead of IF, let’s use a score based on how often an individual article is cited; or a score based on the largest n for which an author has n papers that have each been cited at least n times; or a score based on how often journals cite one another; or some other complex score. My favorite alternative is that we give jobs and grants and tenure to those scientists whose articles have created the most internet buzz, as scored by a decibel meter. While any of these (save perhaps the last) might be a marginal improvement, they hardly solve the problem.
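For readers who have not met the author-level score mentioned above, commonly known as the h-index, here is a minimal sketch of how it can be computed. The function name `h_index` and the sample citation counts are assumptions made for illustration, not anything from a particular citation database.

```python
def h_index(citation_counts):
    """Return the largest n such that n papers each have at least n citations."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            # The top `rank` papers all have at least `rank` citations.
            h = rank
        else:
            # Counts only decrease from here, so no larger n can qualify.
            break
    return h


if __name__ == "__main__":
    # Invented citation counts for two hypothetical authors.
    steady_author = [10, 8, 5, 4, 3]
    one_hit_author = [400, 1, 0]
    print(h_index(steady_author), h_index(one_hit_author))
```

The sketch shows that this score, too, squeezes a body of work into one number. It damps the effect of a single blockbuster paper, but it still says nothing about what any of the papers contain.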

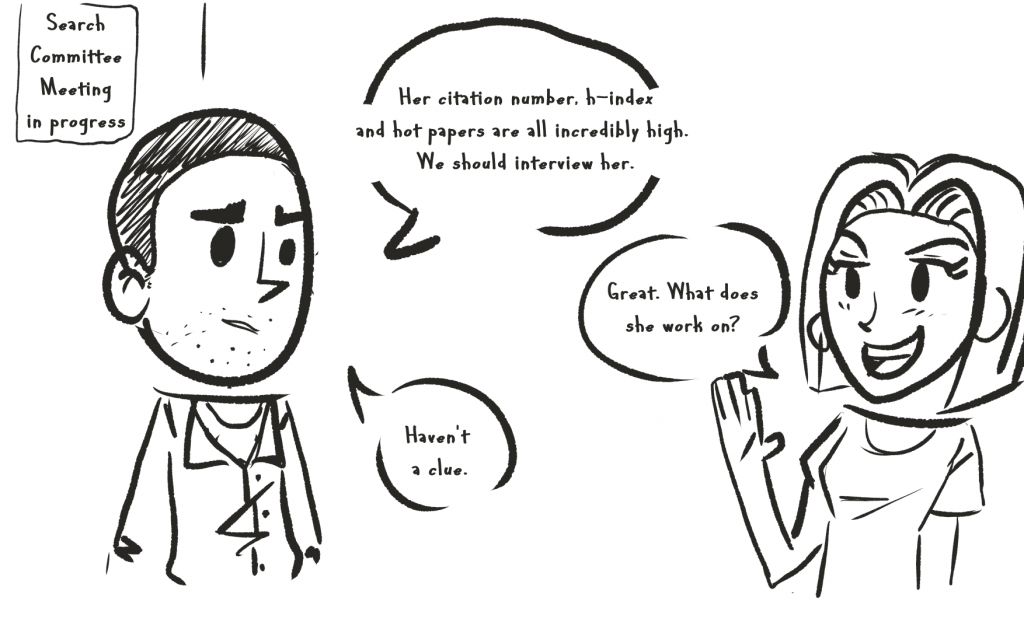

The simplest solution is that those who evaluate science simply read the papers of those being evaluated and make their own decisions about the quality and significance of the work. Search committees might dodge this obligation by pleading they have too many applicants. Study section members get less of a pass because they are reviewers of a limited number of grant applications. Those asked to comment on the suitability of a candidate for tenure get no dispensation for ignoring the content of the publications of the one person they are asked to consider. But I fear that those who would avoid reading the literature will avoid reading the literature. Now what?

I propose a two-part solution: half standard, half structural. The standard: instead of providing the usual CV that lists all publications, biologists should be allowed to list only a very few, perhaps five. For each, they provide a summary of why the research is significant, how it advanced its field, how it was not duplicated by the work of others, and what their specific role in the work was. The structural: they list each publication only by article title and authors, and leave off the journal name, volume and page numbers. In fact, the NIH could readily put a crimp into the IF by simply not allowing journal names to appear in the biosketches of grant applicants; universities could follow suit by not allowing journal names in the CVs of job or tenure candidates.

Of course, this no-names policy might be circumvented by a reviewer who insists on searching out the missing journal names. But perhaps the reviewer was already seduced by the persuasiveness of an author summary to give the applicant a second look, even to the point of reading an article (provided, of course, in manuscript format without obvious journal affiliation). Maybe other committees circumvent the policy by hiring an underling to do a PubMed search to ferret out the journal names of every article in every CV submitted. If that occurs, then PubMed won’t be allowed to list journal names. If this is still insufficient to ensure journal anonymity, then the journals won’t be allowed to use their names; each will be identified by the sum of the digits in its IF times a random number, which will change daily.

In the end, the only criterion of significance is the work itself, and we all know lots of significant work that appeared in multi-word journals and lots of insignificant work that appeared in single-word journals. If we can’t figure this out without IF figures, then we deserve the wrong faculty we hire, the wrong faculty we fund, and the wrong faculty we tenure. The next time someone tells you that a biologist must be talented because she published in [one-word journal] and [another one-word journal], say that you read those papers and they were garbage (don’t worry if they happened to be exceptional). If we all say this enough times, maybe we’ll get our colleagues to refer to the research rather than its unjustified proxy.